by Cathy Li and Farah Lalani*

The pandemic highlighted the importance of online safety, as many aspects of our lives, including work, education, and entertainment, became fully virtual. With more than 4.7 billion internet users globally, decisions about what content people should be able to create, see, and share online had (and continue to have) significant implications for people across the world. A new report by the World Economic Forum, Advancing Digital Safety: A Framework to Align Global Action, explores the fundamental issues that need to be addressed:

-How should the safety of digital platforms be assessed?

-What is the responsibility of the private and public sectors in governing safety online?

-How can industry-wide progress be measured?

While many parts of the world are now moving along a recovery path out of the COVID-19 pandemic, some major barriers remain to emerging from this crisis with safer societies online and offline. By analysing the following three urgent areas of harm, we can start to better understand the interaction between goals of privacy, free expression, innovation, profitability, responsibility, and safety.

Health misinformation

One main challenge to online safety is the proliferation of health misinformation, particularly when it comes to vaccines. Research has shown that a small number of influential people are responsible for the bulk of anti-vaccination content on social platforms. This content seems to be reaching a wide audience. For example, research by King’s College London has found that one in three people in the UK (34%) say they’ve seen or heard messages discouraging the public from getting a coronavirus vaccine. The real-world impact of this is now becoming clearer.

Research has also shown that exposure to misinformation is associated with a decline in intent to be vaccinated. In fact, scientific-sounding misinformation is more strongly associated with declines in vaccination intent. A recent study by The Economic and Social Research Institute’s (ESRI) Behavioural Research Unit found that people who are less likely to follow news coverage about COVID-19 are more likely to be vaccine hesitant. Given these findings, it is clear that the media ecosystem has a large role to play in both tackling misinformation and reaching audiences to increase knowledge about the vaccine.

This highlights one of the core challenges for many digital platforms: how far should they go in moderating content on their sites, including anti-vaccination narratives? While private companies have the right to moderate content on their platforms according to their own terms and policies, there is an ongoing tension between too little and too much content being actioned by platforms that operate globally.

This past year, Facebook, like other platforms, decided to place an outright ban on misinformation about vaccines and has been racing to keep up with enforcing its policies, as has YouTube. Cases like that of Robert F Kennedy Junior, a prominent anti-vaccine campaigner who has been banned from Instagram but is still allowed to remain on Facebook and Twitter, highlight the continued issue. Particularly troubling for some critics is his targeting of ethnic minority communities to sow distrust in health authorities. Protection of vulnerable groups, including minorities and children, must be top of mind when considering how to balance free expression and safety.

Child exploitation and abuse

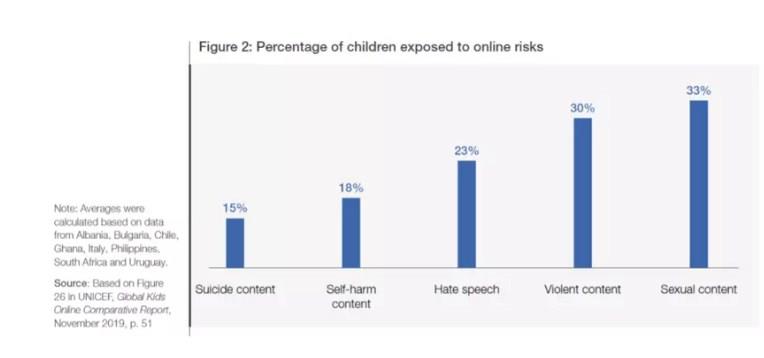

Other troubling activity online has soared during the pandemic: reports showed a jump in consumption and distribution of child sexual exploitation and abuse material (CSEAM) across the web. With one in three children exposed to sexual content online, it is the largest risk kids face when using the web.

Given the role of private messaging, streaming, and other digital channels that are used to facilitate such activity, the tension between privacy and safety needs to be addressed to solve this issue. For example, encryption is a tool that is integral to protecting privacy; however, detecting illegal material by proactively scanning, monitoring, and filtering user content currently cannot work with encryption.

Recent changes to the European Commission’s e-privacy directive, which required stricter restrictions on the privacy of message data, resulted in a 46% fall in referrals for child sexual abuse material coming from the EU; this occurred in only the first three weeks after scanning was halted by Facebook. While this law has since been updated, it is clear that tools, laws, and policies designed for greater privacy can have both positive and negative implications for different user groups from a safety perspective. As internet usage grows, addressing this underlying tension between privacy and safety is more critical than ever before.

Violent extremism and terrorism

The pandemic exposed deep-seated social and political divides which reached breaking point in 2021 as seen in acts of terrorism, violence, and extremism globally. In the US, the 6th January Capitol Insurrection led to a deeper look at how groups like QAnon were able to organize online and necessitated a better understanding of the relationship between social platforms and extremist activity.

Unfortunately, this is not a new problem; a report by The New Zealand Royal Commission highlighted the role of YouTube in the radicalization of the terrorist who killed 51 people during Friday prayers at two mosques in Christchurch in 2019. Footage of this attack was also streamed on Facebook Live, and in the 24 hours after the attack the company scrambled to remove 1.5 million videos containing this footage.

The role of smaller platforms is also highlighted in the report, citing the terrorist’s engagement with content promoting extreme right-wing and ethno-nationalist views on sites like 4chan and 8chan. Some call for a larger governmental role in addressing this issue whilst others highlight the risk of governments abusing the expanded power. Legislation requiring companies to respond to content takedown requests adds complexity to the shared responsibility between the public and private sectors.

When legislation such as Germany’s Network Enforcement Act (NetzDG) demands quicker action by the private sector, potential issues of accuracy and overreach arise, even if speed may be beneficial given the (often) immediate impact of harmful content. Regardless of whether future decisions related to harmful content are determined more by the public or private sector, the underlying concentration of power requires checks and balances to ensure that human rights are upheld in the process and in enacting any new legislation.

So, what do these seemingly disparate problems have in common when it comes to addressing digital safety? They all point to deficiencies in how the current digital media ecosystem functions in three key areas:

1. Deficient thresholds for meaningful protection

Metrics currently reported on by platforms, which focus largely on the absolute number of pieces of content removed, do not provide an adequate measure of safety according to a user’s experience; improvements in detecting or enforcing content policies, changes in these policies and content categories over time, and actual increases in the harmful content itself are not easily dissected. Even measures such as “prevalence,” defined as user views of harmful content (according to platform policies) as a proportion of all views, do not reflect the important nuance that certain groups are more targeted on platforms based on their gender, race, ethnicity and other factors tied to their identity (and are therefore more exposed to such harmful content).
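To make the limitation of an aggregate prevalence figure concrete, the short Python sketch below computes prevalence as defined above (views of harmful content divided by all views), first across all users and then per user group. It is only an illustration: the group labels, the view counts, and the function names are hypothetical and are not drawn from the report or from any platform's data.

```python
from collections import defaultdict


def prevalence(views):
    """Share of all views that were views of harmful content.

    `views` is a list of (group, is_harmful) pairs, one pair per view.
    """
    if not views:
        return 0.0
    harmful = sum(1 for _, is_harmful in views if is_harmful)
    return harmful / len(views)


def prevalence_by_group(views):
    """Same ratio, computed separately for each user group."""
    totals = defaultdict(int)
    harmful = defaultdict(int)
    for group, is_harmful in views:
        totals[group] += 1
        if is_harmful:
            harmful[group] += 1
    return {group: harmful[group] / totals[group] for group in totals}


# Hypothetical data: group_a has 100 views (2 harmful),
# group_b has 20 views (5 harmful).
sample_views = (
    [("group_a", False)] * 98
    + [("group_a", True)] * 2
    + [("group_b", False)] * 15
    + [("group_b", True)] * 5
)

overall = prevalence(sample_views)
by_group = prevalence_by_group(sample_views)

print(f"overall prevalence: {overall:.1%}")
for group, value in sorted(by_group.items()):
    print(f"{group}: {value:.1%}")
```

In this made-up sample the overall figure is just under 6%, while the smaller group sees harmful content in a quarter of its views, which is the kind of gap a single platform-wide number would hide.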

Measures that go beyond the receiving end of content (e.g. consumption) to highlight the supply side of the information could help; metrics, such as the top 10,000 groups (based on members) by country or top 10,000 URLs shared with the number of impressions, could shed light on how, from where and by whom harmful content first originates.

2. Deficient standards for undue influence in recommender systems

COVID-19 has highlighted issues in automated recommendations. A recent audit of Amazon recommendation algorithms shows that 10.47% of their results promote “misinformative health products,” which were also ranked higher than results for products that debunked these claims; clicks on a misinformative product also tended to skew later search results. More broadly, when it comes to recommended content, it is currently unclear if and how such content is made more financially attractive through advertising mechanisms, how this relates to use of personal information, and whether there is a conflict of interest when it comes to user safety.

3. Deficient complaint protocols across private-public lines

Decisions regarding content removal, user suspension, and other efforts at online remedy can be contentious. Depending on who one asks, sometimes they may go too far and other times not far enough. When complaints are made internally to a platform, especially ones with cascading repercussions, what constitutes a sufficient remedy process, in terms of the time it takes to resolve a complaint, accuracy in the decision according to stated policies, accessibility of redress, and escalations/appeals when the user does not agree with the outcome? Currently, baseline standards for complaint protocols through industry KPIs or other mechanisms to gauge effectiveness and efficiency do not exist and therefore the adequacy of these cannot be assessed.

New framework and path forward

In collaboration with over 50 experts across government, civil society, academia, and business, the World Economic Forum has developed a user-centric framework, outlined in the new report, with minimum harm thresholds, auditable recommendation systems, appropriate use of personal details, and adequate complaint protocols to create a safety baseline for use of digital products and services.

While this is a starting point to guide better governance of decisions on digital platforms impacting user safety, more deliberate coordination between the public and private sector is needed – today, the launch of the newly formed Global Coalition for Digital Safety aims to accomplish this very goal.

*Head of Media, Entertainment & Sport Industries, World Economic Forum and Community Curator, Media, Entertainment & Information Industries, World Economic Forum

**first published in: www.weforum.org

By: N. Peter Kramer
